Is the rise of AI a threat or an opportunity for devs? Many fear that AI will replace human software engineers or make it harder for junior programmers to break into the field. But what if the truth is quite the opposite? As someone in the business of helping people switch careers and learn coding through my website junior.guru, I believe that in the age of AI, junior devs may actually hold the winning edge.

Hype aside, here are some facts: AI has the potential to make previously unscalable tasks scalable and seemingly impossible tasks achievable. Unlike recent hype waves such as blockchain, AI tools provide value that people can recognize immediately. Moreover, these tools are often free or reasonably cheap. Almost anyone can come up with tasks where AI can help, including your hairdresser. This has the potential to be a game-changer, akin to the impact of calculators, cars, personal computers, or smartphones.

How smartphone augments my abilities

Let's take a closer look at how the latest of these inventions has changed our lives.

When I get lost in the streets and look at my iPhone, I can immediately see exactly where I am. I can look up the closest ATMs and walk to one of them. I can use contactless Apple Pay to withdraw some cash, and that's just a fraction of what the phone can do. It augments my abilities in such a way that my grandfather, back in his 30s, would have thought it was all pure sorcery.

At any place, at any moment, I have easy access to most of the information collected by humans, in all its breadth and depth. In contrast, my grandfather had a limited set of books, maybe a radio. But I have the internet, Google, Wikipedia, Stack Overflow, and so much more.

I'm also way more ambitious with what I work on. Juggling all the parallel conversations, tasks, and projects, I'm probably 100 times more effective and productive than my grandfather was. I'm not saying this is purely good; fetishizing productivity and trying to get more done in every second of our lives has its own problems. But the fact is that thanks to technology augmenting my abilities, I can do more than my ancestors could.

My smartphone and personal computer also compensate for my limitations. I'm so bad at math that I can't do 12 + 42 in my head without an embarrassing amount of effort. So I type even simple stuff like that into the calculator (to be precise, into the Spotlight or Google search fields). I might feel silly doing it, but at the same time, I know I can rely on it. Anytime. Thanks to that, I'm not afraid of adding. Not even multiplying! And with WolframAlpha, I'm downright invincible.

AI stands for 'new cool tool'

Now, back to AI. Today, the terms AI and ChatGPT are often used interchangeably, but it's important to understand what AI actually means. Essentially, every time humans invent something that allows computers to do tasks they couldn't do before, we call it artificial intelligence.

AI has been playing against us in single-player games since the early days of computers. It's behind the face detection in our cameras for better photos, the translations in Google Translate, and the spell check in Google Docs, to name just a few examples. AI is already all around us in various forms. As we become accustomed to its presence, the AI label shifts to something newer and more impressive. This is called the AI effect.

Simon Willison describes LLMs like GPT as calculators for words, and I find this analogy to be useful for predicting the future as well. We will learn how to use these "calculators", adapt to them, get bored, and eventually move on to something new.

The first iPhone was released in 2007, when I was 20 years old. While smartphones have revolutionized many aspects of my life and given me superpowers my ancestors couldn't have imagined, today the device no longer wows anyone. It's boring.

With my tasks and projects, I have naturally transitioned to a higher level of abstraction. To find something, my grandfather had to rely on paper maps, understand how to locate himself on the map, and comprehend the intricacies of map usage. Today, I can simply type "kebab" into Google Maps, tap on an arrow, and a voice tells me where to go. I can focus on the task at hand and forget about the details.

LLMs are the next level of augmentation

Some people say LLMs (large language models) make mistakes and that's why they're downright useless. But in this world, nothing is perfect. Humans are okay with taking some extra steps if a tool is just good enough. If it solves 80% of the problem with 20% of the effort, great! This is true for cars, phones, planes, computers, all software, and it applies to LLMs, too.

Just a few months after ChatGPT launched, we already have GPT-4, which is much better, and plugins, which change the game even further. Still, as of now, asking ChatGPT to return facts or other exact information isn't the best way to use it. However, it is already an amazing tool for mentoring, learning, and brainstorming.

Instead of blindly copy-pasting the code that ChatGPT generates, go through it line by line and make sure you understand it. Ask it for clarification. Double-check anything that seems suspicious against an authoritative source (e.g., the official documentation). Keep in mind that LLMs are 100% confident, but not 100% accurate.

See how last December, Simon Willison used ChatGPT and GitHub Copilot to learn Rust. I've seen people telling the LLM to behave like a tutor who never answers directly but guides you to come up with the solution yourself by giving you questions and little hints. This can be further enhanced with links to relevant docs and additional explanations. Despite its imperfections, it's already very, very useful!

Junior stands for 'less experience'

Some say that ChatGPT or GitHub Copilot produce code that is already similar to what an entry-level software engineer would write. They conclude that this means junior devs won't be needed anymore.

First of all, being a junior is just a career phase. People try something, get better, become juniors, get better, become seniors. Tools can make the transition easier or faster, but they cannot replace juniors specifically.

As far as I know, AI can't clone people yet. I've seen many companies ignore this and spend immense amounts of money and resources on hiring seniors instead of nurturing junior talent, but the only way seniors can really reproduce is by teaching those who are less experienced.

It may seem obvious, but it apparently needs to be stated: there are no seniors without juniors. Juniors are the future seniors, and all seniors were once juniors.

As I've mentioned, AI already makes the transition from junior to senior faster and easier. However, it cannot eliminate the difference between individuals with varying levels of experience in the job. If the difference ceases to exist, it would imply that the job is unnecessary altogether. And I don't think that AI will render all devs obsolete (more on that later).

Why junior devs have the best timing

If there is a new tool augmenting our abilities and learning is suddenly faster and easier, what's the outcome? Better juniors and seniors!

This is a bit of a silly chart, but you probably get how I think about it. It's gonna be the same as with personal computers. Accountants still exist; they just became personal computer operators.

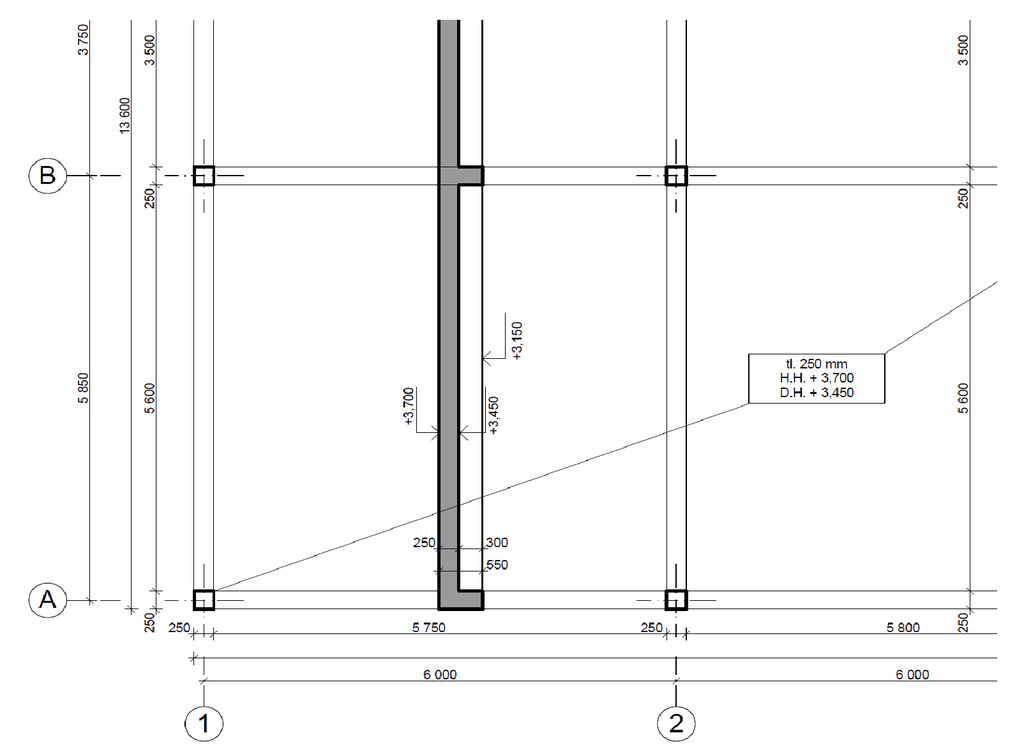

Do you see the lines, numbers, and letters? Architects used to draw all this by hand. Today they have AutoCAD. The advent of software for architects did not render junior architects obsolete; rather, it elevated them to a higher level of abstraction in their work. They can now skip technicalities and focus on the task at hand. And perhaps thanks to that, their teams can pursue more ambitious projects, such as organic architecture, which goes beyond straight lines and right angles.

The AI revolution will disrupt the job market, but not necessarily in a way that makes junior developers obsolete. Depending on the pace of change, companies will gradually or suddenly start favoring individuals who are fluent with the new AI superpowers, similar to how they once favored those who were skilled in MS Word 97. Do you recall the era when "computer skills" were highlighted on CVs and adults signed up for computer courses?

Those who were studying accounting or architecture at that time were in a prime position. They had to do everything on paper during their studies, which may have been tedious if they had a sense that computers could automate those tasks. However, in their careers, they became the first operators of personal computers in their respective fields. As for those who were already established in their careers, they had to learn and adapt to computers, which became the new norm.

The more savvy among beginner devs are already utilizing AI as their mentor. They won't have to alter their habits, routines, tools, or approach. They won't need to abandon their perfectionism and meticulous code crafting, as they are already accustomed to scanning through larger suggested code chunks with suspicion. They won't need to learn how to prompt ChatGPT or GitHub Copilot. For them, it will be natural. Soon, some juniors skilled in augmenting their capabilities with AI may even be preferred over senior devs who are resistant to working with these new tools.

This is a true revolution. The old class system is collapsing, and a new one is about to be established. Those who quickly learn how to adapt will benefit from the shake-up, achieving successful careers and becoming respected authorities. On the other hand, those who cling to their old ways will be pushed aside.

AI will not replace you. A person using AI will.

You may think this doesn't apply to you, right? It's about that old fart commuting daily to a cubicle in a big office building, working on some Java 8 systems. But no, all of us seniors grow inflexible. Python 3.5, which introduced type hints, was released in 2015. The first time I tried using them in my project? A few weeks ago. It took me 8 years just to try them.

I'm not worried that AI will replace juniors. I'm afraid that juniors, augmented by AI, may eventually replace us seniors.

Closing the university gap

I already mentioned that I help people learn coding and find their first job as a dev. While I'm happy to support computer studies graduates in their efforts, my main focus is on those who are switching careers from a different job.

The usual approach for career switchers is to bet on one of the most in-demand programming ecosystems, such as frontend or Python, and then laser-focus on learning it. Once they are able to produce useful code, they apply for entry-level jobs. However, as they progress in their careers and become vital members of engineering teams, they may realize that they still lack some knowledge compared to those who studied computer science at university.

Everyday React components? Sure, no problem. But then some tasks require a deeper understanding of operating systems, algorithms, databases, and perhaps even compilers. In such cases, career switchers may either attempt to reinvent the wheel or admit that they don't know how to approach the problem and ask for guidance.

No matter how well-prepared university graduates were for their exams, they have been exposed to numerous subjects and concepts. While they may not remember all the details, they have a sense that solutions for certain problems already exist under specific names. Even if they don't recall them precisely, they can rely on Google for help. They may tap into an old memory, search for "philosophers fork algorithm", and find the Wikipedia page for the Dining philosophers problem to read about the solutions. They have a general overview of the field, and they know what they don't know.
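To make that example concrete: once you know the name, the classic fixes are easy to look up. Below is a minimal Python sketch of one of them, the resource-hierarchy solution, where every philosopher picks up the lower-numbered fork first, so a circular wait (and therefore a deadlock) can never form. The function `dine` and its parameters are hypothetical, made up for this illustration; it's a toy, not a template for real concurrent code.

```python
import threading


def dine(num_philosophers: int = 5, meals: int = 3) -> list[int]:
    """Simulate the dining philosophers using the resource-hierarchy solution.

    Each fork is a lock. Every philosopher always grabs the lower-numbered
    fork first, which rules out the circular wait that causes deadlock.
    """
    if num_philosophers < 2:
        raise ValueError("need at least two philosophers (and two forks)")

    forks = [threading.Lock() for _ in range(num_philosophers)]
    meals_eaten = [0] * num_philosophers  # each slot is written by one thread only

    def philosopher(i: int) -> None:
        left, right = i, (i + 1) % num_philosophers
        first, second = min(left, right), max(left, right)  # global fork order
        for _ in range(meals):
            with forks[first]:
                with forks[second]:
                    meals_eaten[i] += 1  # "eating" while holding both forks

    threads = [
        threading.Thread(target=philosopher, args=(i,))
        for i in range(num_philosophers)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return meals_eaten


print(dine())  # [3, 3, 3, 3, 3]
```

Without the `min`/`max` ordering, each philosopher could grab their left fork at the same moment and wait forever for the right one; with it, at least one philosopher can always finish eating and release their forks.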

Students will understand all the layers of the computer based systems including hardware (semiconductor components, logic networks, processors, peripheral devices), software (control and data structures, object orientation, programming languages, compilers, operating systems, databases), as well as their common applications (information systems, computer networks, artificial intelligence, computer graphics and multimedia). They will understand foundations of computer science (discrete mathematics, formal languages and their models, spectral analysis of signals, modelling and simulation). Graduates will be able to analyse, design, implement, test, and maintain common computer applications. They will be able to work efficiently in teams.

The issue with career switchers is that they are often unaware of what they don't know. This means they struggle to identify what to look for and what questions to ask.

I believe this is, in essence, a problem of information discoverability. In the past, schools focused on memorizing facts to provide us with a general overview. Nowadays, schools are more relaxed, but still expect us to have a basic foundation of facts memorized. The data points we remember give us some guidance, and we can fill in the gaps just-in-time using the internet.

Even with Google and Stack Overflow at hand, we still need to know what to search for when solving a task, so that we can augment our abilities with existing knowledge on the topic. Doing our own research can be tedious, as it is hard to separate signal from noise without reliable sources.

Asking questions in the right way can also be tedious. Using Google effectively is a skill that juniors need to be trained in. Asking questions on forums can be tricky as well. The classic guide on how to ask questions on the internet without angering those being asked is 65,825 characters long.

Let's say I want to efficiently display billions of pins on a Leaflet map. Basic tutorials are easy to read, but won't take me far enough. Hardcore academic papers, on the other hand, are hard to read (especially on a phone screen with two-column PDFs) and may go into too much detail. Moreover, my task may have some specific requirements that make many existing resources irrelevant or difficult to apply.

That's where LLMs, like ChatGPT, come in as the missing piece. We can simply ask this calculator for advice on how to efficiently display billions of pins on a Leaflet map, and the ensuing conversation can provide us with various existing approaches and their names.
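For illustration, one approach such a conversation is likely to surface is clustering: instead of sending every pin to the browser, the server groups nearby points and sends one marker per group, along with a count. The Python sketch below shows the simplest variant, grid-based clustering. It assumes points are `(lat, lon)` tuples and uses plain degree-sized cells that halve with each zoom level; real tiled maps use the Web Mercator projection, and with billions of points you would precompute clusters per zoom level rather than loop in Python. The function `grid_cluster` is hypothetical.

```python
import math
from collections import defaultdict


def grid_cluster(points, zoom):
    """Aggregate (lat, lon) points into one cluster per grid cell.

    The cell size halves with each zoom level, loosely mirroring how web map
    tiles subdivide (a simplification: real tiles use Web Mercator, not
    plain degrees).
    """
    cell = 360.0 / (2 ** zoom)  # cell width and height in degrees
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # cell -> [lat_sum, lon_sum, count]
    for lat, lon in points:
        key = (math.floor(lat / cell), math.floor(lon / cell))
        acc = sums[key]
        acc[0] += lat
        acc[1] += lon
        acc[2] += 1
    # One marker per non-empty cell, placed at the centroid of its points
    return [
        {"lat": s[0] / s[2], "lon": s[1] / s[2], "count": s[2]}
        for s in sums.values()
    ]


pins = [(50.08, 14.42), (50.09, 14.43), (48.20, 16.37)]  # two in Prague, one in Vienna
print(len(grid_cluster(pins, zoom=2)))   # 1
print(len(grid_cluster(pins, zoom=10)))  # 2
```

Zoomed out, all three pins collapse into a single marker; zoomed in, Prague and Vienna separate. The browser then only ever renders as many markers as there are non-empty cells on screen, no matter how many raw points exist.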

Another example: you know some Python and want to write a program that detects the beats per minute (BPM) of songs or does other cool things with sound. Instead of spending a week on research, a single conversation with an LLM can inform you about concepts like Fourier transforms and what they entail. It can help you use WolframAlpha to calculate what you need and explain how these concepts are relevant to your specific project. And if you ask nicely, it can explain everything to you in simple terms, like you're five.
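Here's a taste of where such a conversation might lead. A common simplification of tempo detection first turns audio into an onset-strength envelope (one number per short frame, roughly "how sharply did the sound get louder here") and then looks for the strongest periodicity in that envelope with a Fourier transform. The Python sketch below covers only that second step and assumes the envelope has already been computed; the function `estimate_bpm` and its 60 to 200 BPM search range are illustrative choices, not a standard API.

```python
import cmath
import math


def estimate_bpm(envelope, frame_rate, min_bpm=60, max_bpm=200):
    """Estimate tempo from an onset-strength envelope via a Fourier transform.

    `envelope` holds one value per analysis frame; `frame_rate` is frames per
    second. For each candidate tempo, we evaluate the Fourier coefficient at
    the matching frequency (bpm / 60 Hz) and keep the strongest one.
    """
    mean = sum(envelope) / len(envelope)
    centered = [v - mean for v in envelope]  # remove the constant (DC) part
    best_bpm, best_mag = None, -1.0
    for bpm in range(min_bpm, max_bpm + 1):
        freq = bpm / 60.0  # beats per second, i.e. Hz
        coeff = sum(
            v * cmath.exp(-2j * math.pi * freq * n / frame_rate)
            for n, v in enumerate(centered)
        )
        if abs(coeff) > best_mag:
            best_bpm, best_mag = bpm, abs(coeff)
    return best_bpm


# Synthetic envelope: a click every 0.5 s (120 BPM), 100 frames/s, 10 s long
frame_rate = 100
envelope = [1.0 if n % 50 == 0 else 0.0 for n in range(10 * frame_rate)]
print(estimate_bpm(envelope, frame_rate))  # 120
```

At the true tempo, every click lines up with the same phase of the complex exponential, so their contributions add up; at other tempos they partly cancel out. Real music needs more care (computing the envelope from raw samples, and ambiguity between a tempo and its double or half), which is exactly the kind of follow-up question worth asking the LLM.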

This is big! The availability of tools like ChatGPT is closing the gap between graduates and career switchers in terms of gaining a general overview. While there may still be differences in other areas, such as opportunities, contacts, and networking, when it comes to solving challenging tasks, the divide is narrowing.

The education system in my country has taken decades to acknowledge the existence of the internet. This is yet another blow to its outdated, 19th-century-like structure. It's high time for our society to truly contemplate what schools should be focusing on.

Since ChatGPT launched in Nov'22, @StackOverflow traffic dropped 24% from peak, 13% from avg. What other categories of websites - other than technical forums - got affected ?

Diversity and communication skills preferred

I also believe that tools like ChatGPT will revolutionize the skillset of developers. Nowadays, companies are increasingly valuing teamwork and collaboration skills over solitary genius traits. This shift can be challenging for some, as previous generations of developers were often individual geeks, and strong communication or teamwork skills were not prioritized in the job requirements. Those who fail to adapt may now be labeled as "brilliant jerks" and marginalized.

As noted by Simon Willison in his article In Defense of Prompt Engineering, crafting effective AI prompts requires excellent communication skills. Prompting for complex tasks can quickly become challenging without at least some background in linguistics, human psychology, philosophy, etc. In the case of image generation, those with expertise in digital art or art history have a significant advantage.

If technical skills alone are insufficient for devs in today's world, I believe this will be even more true in the future. The question then arises: is it easier to teach coding to communicative individuals with backgrounds in art or psychology, or to enhance the communication skills of professional coders? I believe the former is more likely, and career switchers have a significant advantage in this regard.

In our latest podcast episode (in Czech), Marián Kameništák also mentions that companies may start preferring domain knowledge over technical skills. We are already witnessing career transitions from accountants, artists, dentists, biologists, train drivers, bankers, chemists, and other diverse fields to the tech industry. These folks bring unique domain expertise as well as diverse backgrounds to the table.

We already know that solutions are better tailored to people's needs when they are developed by a diverse group of devs. Diversity in tech is not just about "women in tech", it goes as far as "single parents in tech", "remote mountain dwellers in tech," or "former dentists in tech". And I believe that in the era of AI-augmented software engineering, the importance of diversity in the industry will only become more apparent.

LLMs are not replacing all devs

But is it even worth learning coding anymore? What if AI becomes so advanced that it can not only generate basic "hello world" React components, but entire apps and complex systems? What if it becomes capable of understanding and maintaining all legacy codebases, ultimately making software engineers obsolete? Josh Comeau already wrote what I think about this, so let me quote from his article The End of Front-End Development (which is definitely worth reading in its entirety):

…there is an enormous difference between generating a 50-line HTML document and spitting out a production-ready web application. (…)

Even with 95% accuracy rate, this would be incredibly difficult to debug. It would be like a developer spending months building a huge project, without ever actually trying to run any of the code, until it was 100% finished. This is the stuff of nightmares.

AI isn't magic. It's only as good as its training data. Code snippets are all over the internet, and are often generic. By contrast, every codebase is unique. There are very few large open-source codebases. How's the AI supposed to learn how to build big real-world projects?

We're very quickly reaching the point where non-developers can sit down with a chatbot and crank out a small self-contained project, the sort of thing that folks currently use tools like Webflow to build. And that's awesome!

But I think we're still a very long way from major tech companies letting go of their developer staff and replacing them with prompt engineers. It seems to me like there are several potentially-unsolveable problems that stand in the way of this becoming a reality.

(…) My personal belief is that for the most part, working professionals will find ways to integrate this technology into their workflows, increasing their productivity and value. Certain tasks might be delegated to an AI, but not many jobs.

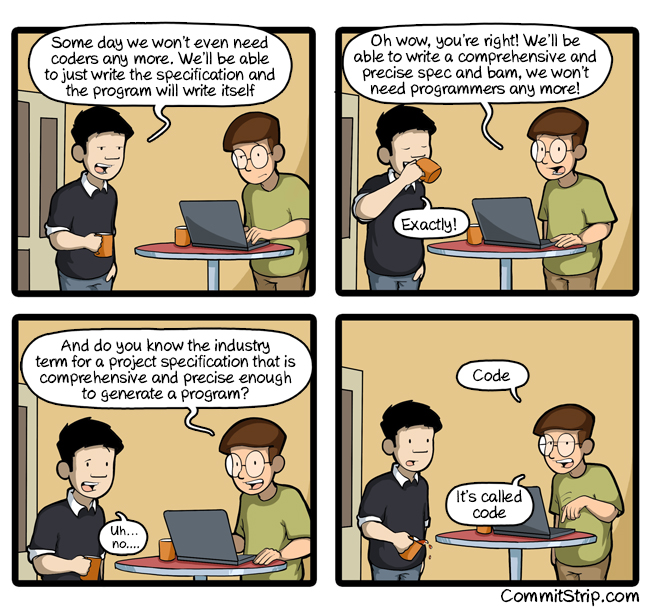

Moreover, I think that software engineering is about understanding people's needs and translating them into a very comprehensive and precise specification. And that spec is called code.

Code is the best representation of what should happen when, and cannot be fully replaced by vaguely worded prompts. While code initially emerged as a set of technical instructions for computers, since the advent of high-level programming languages we're gradually stepping up the abstraction ladder. I believe that today, a majority of the code written is closer to describing business logic rather than managing memory.

Code is transforming from being a contract between humans and computers to a contract between humans themselves. While LLMs can indeed augment our ability to draft these contracts, I hold the belief that the essential task of understanding people's needs and accurately translating them into that precise spec cannot be fully automated. We will continue to perform the same job, just at a higher level of abstraction.

Devaluation of devs

Let me repeat and highlight this part I already quoted:

We're very quickly reaching the point where non-developers can sit down with a chatbot and crank out a small self-contained project, the sort of thing that folks currently use tools like Webflow to build. And that's awesome!

I see this already happening. My friend Jiří Chlebus is an excellent freelance designer, but his dream is to build his own product. For years he's been working on an app that disrupts graphic manuals, Visualbook.

He doesn't know how to code, so he was left with learning random bits from the internet, gluing no-code building blocks, and getting advice from friends. ChatGPT has changed everything for him. He can do things he didn't think he'd be able to do. Translating the app to multiple languages took him a fraction of the time, because ChatGPT is able to translate whole HTML chunks. It's dope!

What does this illustrate? AI not only makes the transition from junior to senior dev faster and easier, but it goes as far as democratizing coding for the masses. This is good for the progress of humankind, but will have consequences for devs. I see two possible scenarios.

Insatiable demand for devs

In one scenario, AI could increase the supply of developers who are "good enough", which would help balance out the seemingly insatiable demand. Such an end to the scarcity would mean, first of all, a drop in our high salaries, and perhaps also an end to the elevated celebrity status of our occupation. This would be good for society, though, as developing software would become easier and cheaper.

Although we are currently experiencing an economic downturn, I believe that this is just a temporary detour caused by current geopolitical factors. According to a 2018 poll conducted by the Czech Statistical Office, two-thirds of Czech companies reported difficulties in filling IT roles. Furthermore, the State of European Tech survey in 2018 revealed that IT is growing five times faster than any other segment. In 2021, the same survey projected that a staggering $100B had been invested in European IT in just one year, ten times more than when the survey started in 2015.

While your present personal experience as a junior dev struggling to get invited for interviews may feel different, the long-term global trend is clear. As more and more human activity shifts to the internet, computers, and phones, backed by software engineering to a greater extent, the demand for developers is expected to continue to rise.

The question remains, how much would the supply of "good enough" developers need to increase in order to balance out this demand? Are we talking about tens of thousands of newcomers entering the field? Perhaps hundreds of thousands? And how long would it take for them to undergo training and become "good enough," even with the assistance of ChatGPT?

Induced demand for devs

In another scenario, which I believe is more likely, there will be induced demand.

What is induced demand? If an additional lane is added to a congested highway, it may initially seem like it would reduce congestion. However, as the paradox of induced demand describes, making commuting by car easier, more accessible, and more comfortable can actually lead to more people using cars and utilizing the new lane. Similarly, if cycling paths in a city are unsafe or scarce, they won't be widely used. As soon as you make them easy to use, you suddenly generate hundreds of cyclists who didn't exist previously.

Anyone can make music, and that is awesome. Anyone can sing, drum on a few flipped plastic buckets, or install LMMS. YouTube is full of lessons and tutorials, making it easier than ever to learn to play musical instruments. Anyone can employ their creativity and make their own music. Does it mean there are no professional musicians? No. Do LMMS and YouTube augment everyone's abilities and make us all more ambitious? Yes!

Imagine what it took to be a photographer in the 19th century. Today, everyone has a camera in their phone, and it's common for people to capture tens, or even hundreds, of photos every day. Did it make the photographer profession go away? Did employing AI in modern professional cameras make the occupation disappear? No. Does it augment everyone's abilities and make us all more ambitious? Yes!

Believe it or not, the number of professional photographers has been increasing year over year. The US Bureau of Labor Statistics expects the number of jobs to grow by 9% over the next decade. For context, the average across all occupations is 5%.

With platforms like WordPress, Webflow, Wix, Shopify, and the rise of the "no-code" and "low-code" hype, people already have the ability to solve coding problems on their own without extensive coding skills. And that's good! Some don't even need that, because for their business it's just enough to have an Instagram profile with a few photos and a phone number. This didn't make devs redundant. It opened up new opportunities and we could move to more ambitious projects, such as custom development and SaaS applications, where our expertise is still highly valued.

All professionals, be it musicians, photographers, or devs, possess one important quality: they know what they're doing, and why. The ease and accessibility of taking pictures didn't lead to wedding photographers going bankrupt. Rather, it enabled ordinary people to take pictures of their butts. Or coffee cups. Or dogs.

The paradox of induced demand applies to your personal life, too. Did washing machines, robot vacuums, or smartphones make you more relaxed than your ancestors? No. They allowed you to be more ambitious. Your ancestors knew they could fit two activities into their day and wished they could manage to get five done. You can fit 20 and wish you could manage to get 50 done. Guess what happens with LLMs like ChatGPT? Let me quote Simon Willison:

As an experienced developer, ChatGPT (and GitHub Copilot) save me an enormous amount of “figuring things out” time. For everything from writing a for loop in Bash to remembering how to make a cross-domain CORS request in JavaScript—I don’t need to even look things up any more, I can just prompt it and get the right answer 80% of the time.

This doesn’t just make me more productive: it lowers my bar for when a project is worth investing time in at all.

In the past I’ve had plenty of ideas for projects which I’ve ruled out because they would take a day—or days—of work to get to a point where they’re useful. I have enough other stuff to build already!

But if ChatGPT can drop that down to an hour or less, those projects can suddenly become viable.

The fact that AI-augmented Simon is as capable as a team of devs doesn't lead to devs losing jobs. It leads to Simon being able to do more tasks that were not possible before. If building software becomes easier and more accessible, it will only make us all more ambitious.

More indie hackers

There will definitely be a shake-up in the job market, though. If a company needed 100 people to do something and now only 10 are enough, this will have a significant impact. In smart companies, the remaining 90 people will be reassigned to ambitious projects the company previously couldn't have taken on. In other companies, however, those 90 people may lose their jobs.

On the other hand, after a layoff, those people can explore new opportunities that they probably wouldn't have even dreamed of before. Tim Ferriss' book The 4-Hour Workweek has been advising people to check their email only once a day and to outsource small daily tasks to virtual assistants since 2007! I guess this option will now become more viable than ever, at least until the majority catches up with all the new technology.

But let's say you want to add value to this world. New tools for content creators, such as YouTube or Substack, have greatly augmented people's abilities to the point that careers such as newsletter author, podcast host, beauty influencer, YouTube video producer, or Twitch streamer now seem viable. The same trend will likely happen with startups.

Pieter Levels gained fame for showcasing how tools like Stripe make it possible to build an entire startup product as a single person. There are already communities of indie hackers and individual makers who aspire to have their own scalable business. Not just a freelance business where they sell their time for money, but a business that allows them to live an independent life, whatever that may mean to them.

If 100 employees can be replaced by 10 AI-augmented employees, then what about a single AI-augmented entrepreneur? What if one bootstrapped founder becomes as capable of delivering a product as whole teams in Silicon Valley?

Just as YouTube disrupted TV production and made it possible for anyone to have their own TV channel, AI has the potential to disrupt Silicon Valley. If access to capital or experienced employees becomes less crucial in delivering a useful product, could we see more apps emerging from unexpected places like Namibia or Ghana?

Keep your FOMO at bay

Now, you might be concerned that if you're not keeping up with all the latest announcements, you're already falling behind. But let me reassure you that the majority of people in the world are not yet using ChatGPT. Keep calm!

Next time you commute on a tram, take a look around and consider how many of the people you see might have their own sophisticated ChatGPT or Stable Diffusion promptbook. None? One? Two?

This could lead to a significant societal divide between those who have access to AI and those who don't. Remember how we learned during the pandemic that many families in Czechia don't even have reliable access to computers? While there may be some opportunities for AI-induced social mobility, I'm afraid it could end up like it always does: with the rich getting richer and the privileged becoming even more privileged. We should definitely be worried about that.

But don't succumb to the fear of missing out! The fact that you're reading this article likely means you're already among the top 0.1% of early adopters. If you've interacted with ChatGPT more than five times, you're probably among the top 0.001%.

Check out this weekly report on new developments in AI. Even experts in the field struggle to keep up. We're witnessing a mass exploration of a technology that is easy to use and accessible to basically anyone, including children. However, much of what's out there is:

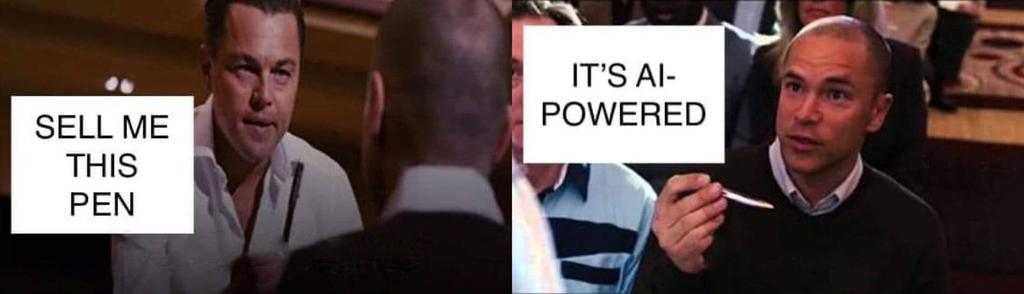

- Hyped-up snake oil selling.

- Demos that break when faced with inputs slightly more complex than those shown in a screencast.

- Not as easy to use as it appears at first glance.

Waiting for products

Most of the stuff announced also isn't a proper product. Not even the ChatGPT interface, which is officially labeled as a research preview.

I've tried experimenting with Stable Diffusion on my own machine, and I was quite surprised by how challenging it is to generate images that are even remotely as appealing as those showcased online. It's possible, but far from simple or easy. The prompting process is convoluted, and there are numerous models, techniques, and complexities to navigate. While Stable Diffusion is a powerful and free tool, it's still a raw resource.

It feels like learning how to prompt now is akin to learning how to build an engine, rather than learning how to drive a car for long-distance transportation. To effectively augment our capabilities, we need real, user-friendly products. Waiting for such products may mean missing out on the gold rush to create them, but it will make it much easier to utilize the new superpowers for other purposes.

Every day, I contemplate ways to integrate AI into my own products and workflow. Had I started working on it instead of just thinking about it, I would have been done by now. Yet, with each new announcement every week, it feels like getting the job done is becoming simpler and simpler. I've saved myself a lot of unnecessary work! While I may not be the first person to integrate AI into an existing product, I believe that if I can do it in 2023, I'll still be among the first 5%. And it's only going to get easier with time.

Checking my bias

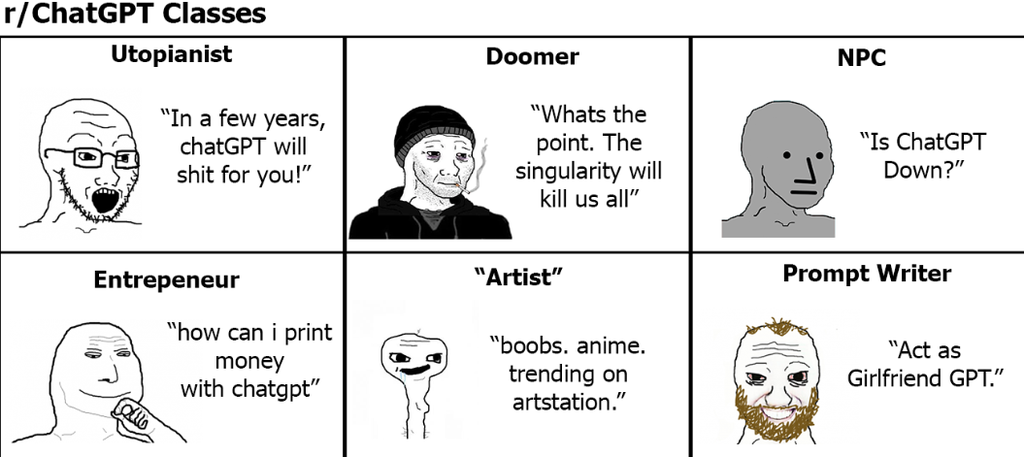

That's all, folks! Thanks for reading. Now, the only thing left is: how can I be so sure about all this? Well, truth be told, I'm not sure at all! Based on this chart, I believe I fall somewhere between the utopianist and the entrepreneur.

I'm an indie hacker in the business of helping more people learn coding. Perhaps I'm only seeing what I want to see. Maybe I've spent several days writing this just to come up with reasons why AI won't render my work useless and negate everything I've been doing for decades.

This blog post is filled with various predictions, so it's likely to be outdated in about two weeks, if I'm lucky. That's also why I've decided to consolidate all my thoughts on the topic into a single post. If I were to split them, some of the individual posts could become outdated before I manage to publish them, and the whole thing wouldn't hold together anymore. This way, at least I can say this was my wishful thinking about AI in the middle of April 2023.

Thanks to Simon Willison, my internet idol, for all he does, and ChatGPT for helping me with grammar and stylistics.